Containerizing and deploying a machine learning model as a service [WIP]

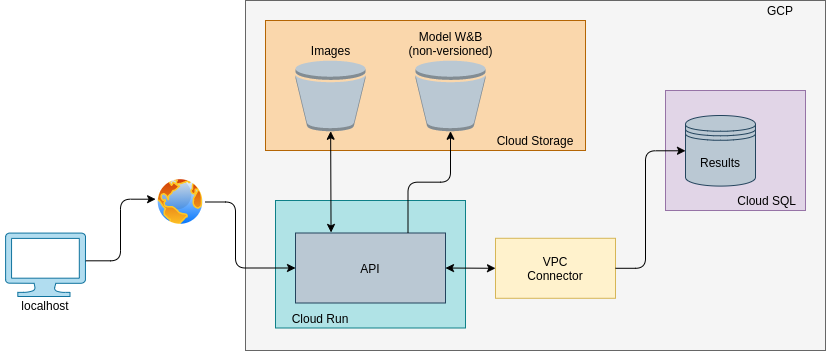

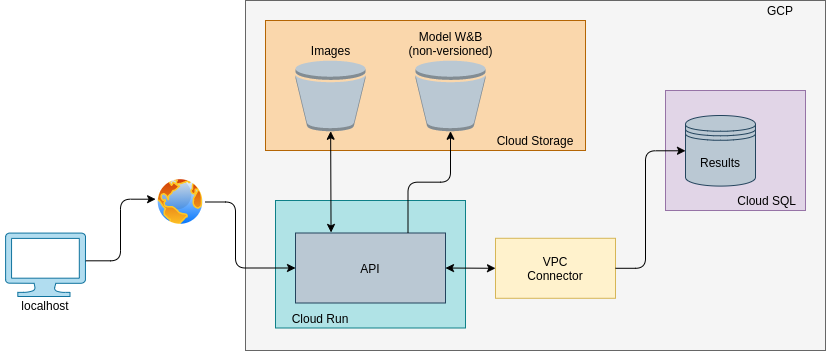

One of the neatest things about machine learning projects is being able to expose them as a service, whether on-premises or in the cloud. When it comes to deploying a containerized application on a fully managed serverless platform, there is an affordable option called Cloud Run (free up to 2 million requests per month). The usual workflow consists of two steps: first, submit (and version) your app's container image; then, deploy it to the platform. The following shell script shows how to do it.
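As a minimal sketch of those two steps, assuming the `gcloud` CLI is installed and authenticated and that a `Dockerfile` sits in the current directory: the project ID, service name, region, and tag below are hypothetical placeholders, and the `run` helper is only an illustration that prints each command and executes it when `DRY_RUN=0`.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical placeholders: replace with your own values.
PROJECT_ID="${PROJECT_ID:-my-project}"
SERVICE="${SERVICE:-ml-model}"
REGION="${REGION:-us-central1}"
TAG="${TAG:-v1}"

# Versioned image name in Container Registry (an assumed naming scheme).
IMAGE="gcr.io/${PROJECT_ID}/${SERVICE}:${TAG}"

# Illustrative helper: print the command, and run it only when DRY_RUN=0.
run() {
  echo "+ $*"
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  fi
}

# Step 1: build the container with Cloud Build and push it under a version tag.
run gcloud builds submit --tag "${IMAGE}" .

# Step 2: deploy that image to fully managed Cloud Run.
# --allow-unauthenticated makes the endpoint public; drop it for a private service.
run gcloud run deploy "${SERVICE}" \
  --image "${IMAGE}" \
  --platform managed \
  --region "${REGION}" \
  --allow-unauthenticated
```

By default the sketch only prints the two `gcloud` commands, so you can review them first; set `DRY_RUN=0` to actually build and deploy. Bumping `TAG` for each release keeps earlier images available if you need to roll back.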